Problems:

Single Host (Containers impacting other containers)

Auto healing: containers start again by themselves, without users' manual intervention (this doesn't happen in plain Docker).

Containers can go down for hundreds of reasons.

- Auto scaling (two options: increase containers automatically or increase them manually). A load balancer is a must.

Docker doesn’t provide enterprise-level support

Docker is simple and minimalistic and doesn't support these features:

Single host

Load balancer

Firewall

Auto scaling

Auto healing

API gateway

and so on (these are enterprise-level standards).

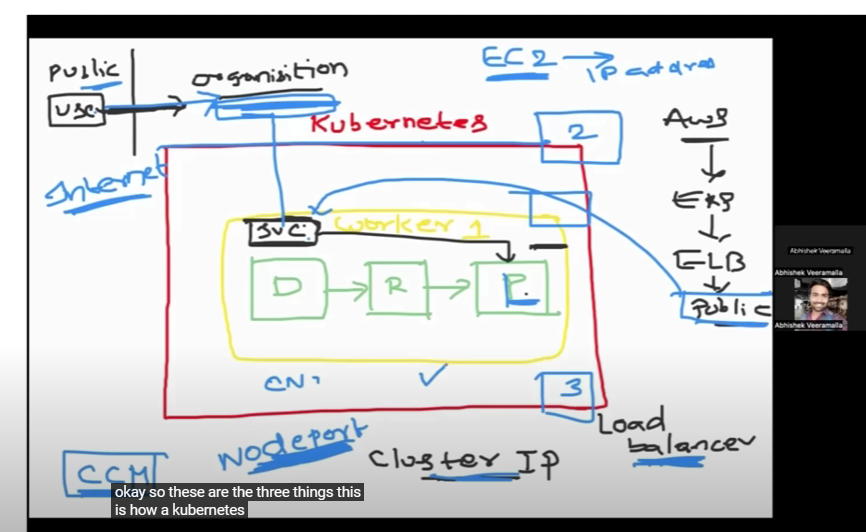

K8s is a cluster, i.e. a group of nodes, and follows a master node (control plane) and worker node architecture.

Replica sets

HPA (Horizontal Pod Auto Scaler)
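As a sketch of how an HPA is typically declared, the manifest below scales a Deployment between 2 and 10 replicas based on average CPU utilization. The names `nginx-hpa` and `nginx-deployment` and the 70% target are illustrative assumptions, and the HPA needs a metrics source such as metrics-server (on Minikube: `minikube addons enable metrics-server`).

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa                # hypothetical name
spec:
  scaleTargetRef:                # the workload whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment       # hypothetical Deployment name
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70 # % of the containers' CPU *requests*, so requests must be set
```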

K8s rolls out a replacement container as soon as a container goes down. K8s evolved from Google's internal Borg system. Docker is not used on its own at enterprise level. K8s by itself doesn't support advanced load-balancing capabilities. Networking is mandatory for running a pod.

Ingress controller:

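Worker node:

Kubelet: Responsible for creating pods.

Kube-proxy: Networking (maintains the network rules that route Service traffic to pods; pod IP addresses themselves are assigned by the cluster's network plugin)

Container runtime: Runs the containers.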

(Master Node) Control Plane:

Core components:

API server

Scheduler: receives information from the API server and decides which node each pod should run on

etcd: key-value store

Controller Manager: runs controllers such as autoscaling and ReplicaSet

Cloud Controller Manager:

Docker swarm

Installation in 2 easy steps:

Install Minikube

Install kubectl (the command-line tool we use to interact with a K8s cluster)

Minikube can use a hypervisor to create a virtual machine that hosts the cluster.

Minikube can run multiple clusters. We can also set different configurations, for example increasing the memory. Minikube supports a lot of add-ons, such as:

- Ingress controller
- Operator Lifecycle Manager (OLM)
- etc.

End Goal: Deploy applications in Containers.

Instead of passing options on the command line as in Docker, we use YAML files in K8s.

Pod: one container (most of the time) or a group of containers.

Advantages of putting a group of containers in a single pod:

Shared Network

Shared Storage

Why would we put multiple containers in one pod?

Suppose you have an application that depends on another application without which it cannot run, or a container in which you have written your API gateway rules or load-balancing rules (a sidecar container). In such cases we can put them in a single pod.

You can access the applications inside the containers using the pod's cluster IP address. IP addresses are not assigned to individual containers; they are assigned to pods.
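A minimal sketch of a two-container pod, assuming an nginx app container and a busybox sidecar (the pod, container, and volume names are hypothetical). Both containers mount the same `emptyDir` volume (shared storage), and the sidecar reaches nginx over `localhost` because both containers share the pod's network namespace and IP address.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-with-sidecar            # hypothetical name
spec:
  volumes:
    - name: shared-data
      emptyDir: {}                  # scratch volume that lives as long as the pod
  containers:
    - name: app
      image: nginx:1.14.2
      ports:
        - containerPort: 80
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share/nginx/html   # nginx serves whatever the sidecar writes here
    - name: sidecar
      image: busybox:1.36
      # Writes index.html into the shared volume, then polls nginx over localhost
      command: ["sh", "-c", "echo 'hello from sidecar' > /data/index.html; while true; do wget -qO- http://localhost:80; sleep 10; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
```

`kubectl logs app-with-sidecar -c sidecar` should then show the page fetched from the nginx container.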

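Pod = a wrapper around containers that makes the life of a DevOps engineer easier.

Pod IP addresses are assigned by the cluster's network (CNI) plugin rather than by kube-proxy; kube-proxy programs the rules that route Service traffic to the pods. You can create hundreds of K8s clusters with kind, which is much lighter-weight than Minikube.

How does Minikube create a cluster?

It creates a single-node Kubernetes cluster.

How do you debug pods or application issues in Kubernetes?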

kubectl describe pod nginx

kubectl logs nginx

If you can deploy a container in K8s using a pod, what is the purpose of a Deployment?

A pod is roughly equivalent to a container: it just provides a YAML specification for running the container.
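For reference, a minimal pod.yml might look like the sketch below; it is modelled on the simple nginx pod in the Kubernetes documentation, and the image tag is an assumption. The pod name matches the `kubectl describe pod nginx` and `kubectl logs nginx` commands above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx                # pod name used by kubectl describe/logs
spec:
  containers:
    - name: nginx
      image: nginx:1.14.2    # same image you would pass to `docker run`
      ports:
        - containerPort: 80  # port the container listens on
```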

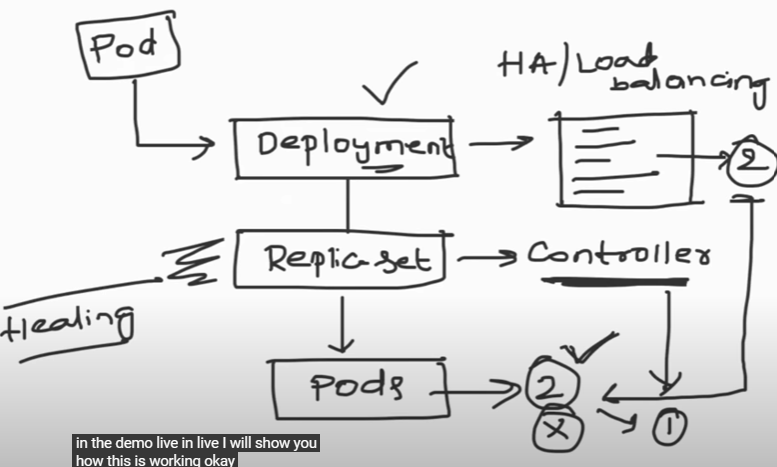

Deployment offers Auto healing and Auto scaling.

kubectl Cheat Sheet | Kubernetes


A controller ensures that the desired state and the actual state of the cluster are the same.

ReplicaSet is the K8s controller that implements auto healing for pods. If a pod gets killed, or a deployment says to increase the pod count by one, the ReplicaSet does it.

Day 3 Kubernetes

Commands:

kubectl get pods

kubectl get deploy

kubectl delete deploy nginx-deployment

kubectl get all

How do you list all the resources available in a particular namespace? kubectl get all -n <namespace> (without -n, it uses the current namespace)

And for all namespaces: kubectl get all -A

ls

deployment.yml pod.yml

vim pod.yml

kubectl apply -f pod.yml

kubectl get pods

kubectl get pods -o wide

minikube ssh

curl 172.17.0.3

https://kubernetes.io/docs/concepts/workloads/controllers/deployment/

vim deployment.yml

kubectl apply -f deployment.yml

kubectl get deploy

kubectl get pods

Deploy -> ReplicaSet -> pod

kubectl get deploy

kubectl get rs

kubectl get pod

kubectl get pods -w

Zero-downtime deployment

A Kubernetes controller is nothing but a Go application that ensures a specific behavior is implemented in K8s.
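The Deploy -> ReplicaSet -> pod chain in the commands above comes from a manifest like the following sketch, modelled on the nginx Deployment in the linked Kubernetes docs. The `strategy` block is an added assumption showing one way to get a zero-downtime rollout: with `maxUnavailable: 0`, a new pod is brought up before an old one is removed.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3                  # desired state: the ReplicaSet keeps 3 pods running
  selector:
    matchLabels:
      app: nginx               # must match the pod template labels below
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0        # never drop below 3 available pods during a rollout
      maxSurge: 1              # start at most 1 extra pod at a time
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
```

Changing the image (for example with `kubectl set image deployment/nginx-deployment nginx=nginx:1.16.1`) creates a new ReplicaSet, which `kubectl get rs` shows alongside the old one.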

What if there was no such feature of service in K8's?

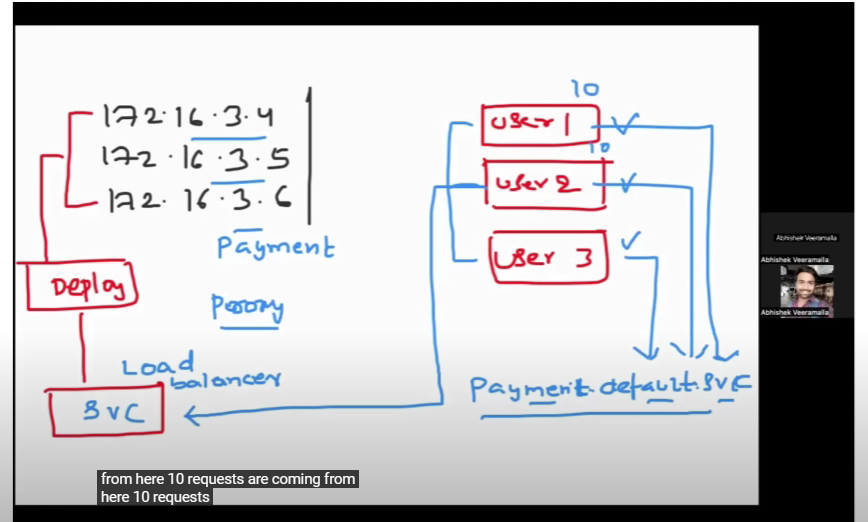

Why do we need service? Load Balancer

Ideal pod size Depends upon the number of concurrent users or requests one replica of the application can handle.

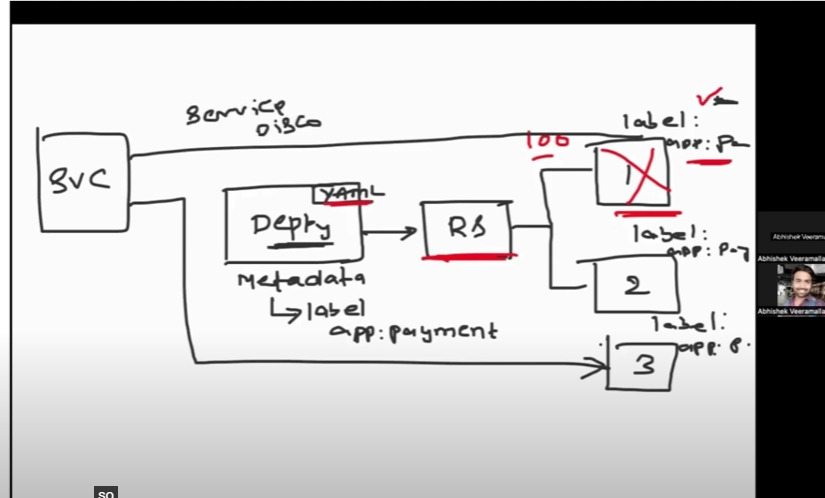

Labels and Selectors: For every pod that is getting created developers will apply a label and it will be common for all the pods because the replica set controller will deploy a new pod with the same YAML that it got this is auto-healing.

If a service is keeping track of pods using labels instead of IP address and the label is always the same = Problem solved (This is the service discovery Mechanism of K8s)
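A sketch of a ClusterIP Service that finds pods by label rather than by IP; the name `nginx-service` is hypothetical and the `app: nginx` selector assumes pods labelled like the nginx Deployment example from the Kubernetes docs. When pods are replaced and get new IPs, the Service keeps routing to whichever pods currently carry the label.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service        # hypothetical name
spec:
  type: ClusterIP            # default type: reachable only inside the cluster
  selector:
    app: nginx               # any pod carrying this label becomes an endpoint
  ports:
    - port: 80               # port the Service listens on
      targetPort: 80         # containerPort on the selected pods
```

`kubectl get endpoints nginx-service` lists the pod IPs the Service has currently discovered.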

Services:

Load Balancing

Service Discovery

Expose to the external world (outside the K8s cluster)

Service types (set in the YAML):

1. ClusterIP (inside the K8s cluster). Advantages: discovery and load balancing

2. NodePort: accessible inside the organization to anyone who has access to a worker node

3. LoadBalancer: exposes the service to the external world

If you create a LoadBalancer service type on your cloud provider, you get an elastic load balancer with a public IP address, which you use to access the application.


AWS -> EKS -> Elastic Load Balancer -> Public IP address

Anyone who wants to access the pods can do so through the public IP address, i.e. basically anyone with internet access.
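A sketch of a LoadBalancer Service for the sample app; the name `python-app-lb` is hypothetical and the selector assumes pods labelled `app: sample-python-app`. On a cloud provider such as EKS this provisions an external load balancer with a public address; on Minikube, `minikube tunnel` is needed before an external IP appears.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: python-app-lb          # hypothetical name
spec:
  type: LoadBalancer           # cloud provider provisions an external load balancer
  selector:
    app: sample-python-app     # same label-based discovery as ClusterIP
  ports:
    - port: 80                 # port exposed by the load balancer
      targetPort: 80           # containerPort on the pods
```

The public address shows up in the EXTERNAL-IP column of `kubectl get svc python-app-lb`.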

Questions and Answers:

Q. 1) What is the difference between Docker and Kubernetes?

Ans: Docker is a container platform, whereas Kubernetes is a container orchestration environment that offers capabilities like auto healing, auto scaling, clustering and enterprise-level support such as load balancing.

Q. 2) What are the main components of Kubernetes architecture?

On a broad level, you can divide the Kubernetes components into two parts:

1. Control Plane (API Server, Scheduler, Controller Manager, Cloud Controller Manager (C-CM), etcd)

2. Data Plane (Kubelet, Kube-proxy, Container Runtime)

Q. 3) What are the main differences between the Docker Swarm and Kubernetes?

Kubernetes is better suited for large organisations, as it offers more scalability, networking capabilities like network policies, and a huge third-party ecosystem.

Q. 4) What is the difference between Docker container and a Kubernetes pod?

A pod in Kubernetes is a runtime specification of a container in Docker. A pod provides a more declarative way of defining containers using YAML, and you can run more than one container in a pod.

Q. 5) What is a namespace in Kubernetes?

In Kubernetes, a namespace is a logical isolation of resources, network policies, RBAC and everything else. For example, if two projects use the same K8s cluster, one project can use ns1 and the other ns2 without any overlap or authentication problems.
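A minimal sketch of the ns1/ns2 example; the namespace names come from the answer above, while the ConfigMaps and their contents are illustrative assumptions. The same object name can exist in both namespaces without clashing, because each object is scoped to its namespace.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ns1
---
apiVersion: v1
kind: Namespace
metadata:
  name: ns2
---
# Same name in both namespaces: no overlap, each project sees only its own
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config           # hypothetical name
  namespace: ns1
data:
  project: "project-1"
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
  namespace: ns2
data:
  project: "project-2"
```

`kubectl get cm -n ns1` and `kubectl get cm -n ns2` each list their own `app-config`.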


Q. 6) What is the role of kube proxy?

Kube-proxy works by maintaining a set of network rules (for example iptables or IPVS rules) on each node in the cluster, which are updated dynamically as services are added or removed. When a client sends a request to a service, those rules on the node where the request was received match it and forward it to one of the service's endpoints (pods).

Kube-proxy is an essential component of a Kubernetes cluster, as it ensures that services can communicate with each other.

Q. 7) What are the different types of services within Kubernetes?

There are three main types of services that a user can create:

1. ClusterIP mode

2. NodePort mode

3. LoadBalancer mode

Q. 8) What is the role of Kubelet?

Kubelet runs on each node and manages the containers that are scheduled to run on that node. It ensures that the containers are running and healthy, and that the resources they need are available.

Kubelet communicates with the Kubernetes API server to get information about the containers that should be running on the node, and then starts and stops the containers as needed to maintain the desired state. It also monitors the containers to ensure that they are running correctly, and restarts them if necessary.

Q. 9) Day-to-day activities on Kubernetes

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-python-app
  labels:
    app: sample-python-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: sample-python-app
  template:
    metadata:
      labels:
        app: sample-python-app
    spec:
      containers:
        - name: sample-python-app
          image: abhishekf5/python-sample-app-demo:v1
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: python-django-sample-app
spec:
  type: NodePort
  selector:
    app: sample-python-app
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30007
```

Why did Ingress come into the picture?

The load-balancing mechanism the Service provided was simple round-robin load balancing.

Example: if you send 10 requests to this specific service, kube-proxy will send five requests to pod 1 and five requests to pod 2.

Enterprise load balancers can offer hundreds of features.

Cloud providers also charge heavily for static public load-balancer IP addresses.

Problems with Kubernetes that virtual machines didn't have:

1. Enterprise & TLS load balancing:

Sticky sessions

TLS (secure HTTPS)

Path-based routing

Host-based routing

Ratio-based routing

Interview question: What is the difference between a LoadBalancer-type Service and a K8s Ingress?

The LoadBalancer Service type was great, but it was missing all the above capabilities, and the cloud provider charges you for each and every LoadBalancer Service.

2. Load balancer cost (the cloud provider charges for every load balancer)

How does Ingress solve this?

OpenShift, a K8s distribution, has Routes, which are similar to K8s Ingress.

Top load balancers people were using with VMs:

NGINX

F5

Traefik

HAProxy

As a user, you create an Ingress resource. K8s doesn't implement the logic for every load balancer in the K8s master or the API server; instead, the load-balancer companies (here NGINX) write their own ingress controllers, e.g. an NGINX ingress controller. As a K8s user, you deploy that ingress controller on the required K8s cluster, using either Helm charts or YAML manifests. Once it is deployed, developers create Ingress YAML resources for their K8s services.

Instead of just creating an Ingress resource, you also have to deploy an ingress controller.

Ingress Controller is a load balancer + API Gateway

Problems ingress solved:

1. Enterprise -Security -Load balancing

2. Service -IP addresses

Ingress is nothing without Ingress Contoller
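A sketch of an Ingress resource for the sample app's Service, assuming the community ingress-nginx controller is installed (on Minikube: `minikube addons enable ingress`). The host `foo.bar.com`, the TLS Secret name, and the cookie-affinity annotation (sticky sessions) are illustrative assumptions; annotations like this one are specific to the controller in use.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: python-app-ingress              # hypothetical name
  annotations:
    # ingress-nginx specific: cookie-based sticky sessions
    nginx.ingress.kubernetes.io/affinity: "cookie"
spec:
  ingressClassName: nginx               # picked up by the ingress-nginx controller
  tls:
    - hosts:
        - foo.bar.com
      secretName: foo-bar-tls           # hypothetical kubernetes.io/tls Secret
  rules:
    - host: foo.bar.com                 # host-based routing
      http:
        paths:
          - path: /                     # path-based routing
            pathType: Prefix
            backend:
              service:
                name: python-django-sample-app
                port:
                  number: 80
```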

https://kubernetes.io/docs/concepts/services-networking/ingress/

Set up Ingress on Minikube with the NGINX Ingress Controller (kubernetes.io)

An Ingress is an API object that defines rules which allow external access to services in a cluster.

MetalLB, bare metal load-balancer for Kubernetes


https://kubernetes.io/docs/tasks/access-application-cluster/ingress-minikube/

https://tldp.org/

https://www.vmware.com/in.html

https://opensource.com/

https://www.geeksforgeeks.org/linux-file-hierarchy-structure/

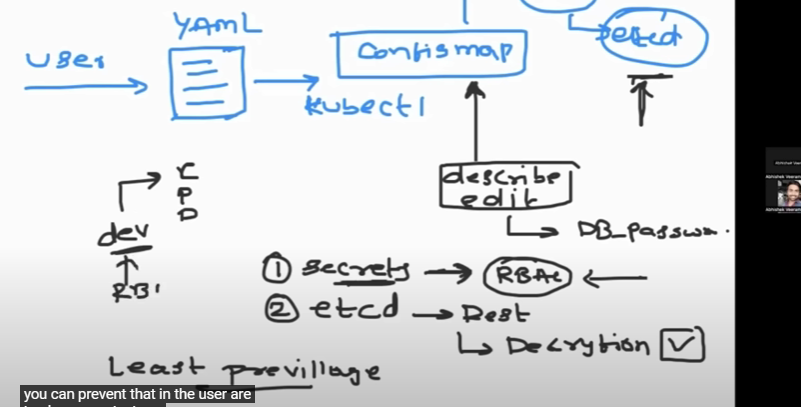

A ConfigMap solves the problem of storing information (data) that can later be used by the application, pod, or deployment.

Why do we need Secrets in K8s?

A Secret solves the same problem, but it deals with sensitive data.

In K8s, whenever you create a resource, its information gets saved in etcd, where the data is stored as objects.

Any attacker who manages to retrieve information from etcd, such as the DB username and DB password, can compromise the entire platform.

Basically: store non-sensitive data in ConfigMaps and sensitive data in Secrets.
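A minimal Secret sketch for the DB credentials example; the name and values are placeholders. `stringData` lets you write plain text, which the API server stores base64-encoded under `data`; base64 is an encoding, not encryption, so anyone who can read the object (or etcd) can decode it.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: test-secret          # hypothetical name
type: Opaque
stringData:                  # plain text here; stored base64-encoded under .data
  db-username: admin         # placeholder value
  db-password: change-me     # placeholder value
```

`kubectl get secret test-secret -o yaml` shows the stored, base64-encoded values.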

Secret:

Encrypt the data at rest: before the object is stored in etcd, K8s can encrypt it (this has to be enabled on the API server; by default Secrets are only base64-encoded).
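Encryption at rest is configured on the API server rather than per Secret. A sketch of an `EncryptionConfiguration` file, assuming the `aescbc` provider; the key name and the placeholder key are assumptions, and the file is passed to kube-apiserver with `--encryption-provider-config`.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets                                      # encrypt Secret objects before they reach etcd
    providers:
      - aescbc:                                      # first provider is used for writing
          keys:
            - name: key1                             # hypothetical key name
              secret: <BASE64-ENCODED-32-BYTE-KEY>   # placeholder, generate your own
      - identity: {}                                 # fallback so existing unencrypted data can still be read
```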

User -> ConfigMap (YAML file) -> kubectl apply -> API server -> etcd (ConfigMap created)

Commands:

vim cm.yml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-cm
data:
  db-port: "3306"
```

kubectl apply -f cm.yml

kubectl get cm

kubectl describe cm test-cm

git remote -v

ls

vim deployment.yml

kubectl apply -f deployment.yml

kubectl get pods -w

env | grep DB

DB-PORT=3306

kubectl apply -f deployment.yml

kubectl get pods -w

kubectl get pods
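One way deployment.yml could read the ConfigMap as an environment variable, sketched under assumptions: it reuses the sample-python-app names used elsewhere in these notes, and the variable name `DB-PORT` matches the `DB-PORT=3306` output shown above (K8s accepts `-` in env names, though `_` is more portable for shells).

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-python-app
  labels:
    app: sample-python-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: sample-python-app
  template:
    metadata:
      labels:
        app: sample-python-app
    spec:
      containers:
        - name: sample-python-app
          image: abhishekf5/python-sample-app-demo:v1
          env:
            - name: DB-PORT            # env var name inside the container
              valueFrom:
                configMapKeyRef:
                  name: test-cm        # the ConfigMap created above
                  key: db-port         # key whose value ("3306") is injected
          ports:
            - containerPort: 80
```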

How will you use your config maps inside your K8s pod?

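A container's environment variables can't be changed while it is running, so a new ConfigMap value would only be picked up after a restart, and in production you often cannot restart containers.

Use volume mounts instead (the ConfigMap is consumed as files instead of environment variables).

Different types of volumes can be created in K8s.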
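The same ConfigMap can be mounted as a volume instead, so each key becomes a file. A sketch, assuming the `test-cm` ConfigMap and a hypothetical mount path `/etc/config`; the kubelet updates the mounted file after the ConfigMap changes (with some delay), which environment variables never do.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-python-app
  labels:
    app: sample-python-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: sample-python-app
  template:
    metadata:
      labels:
        app: sample-python-app
    spec:
      containers:
        - name: sample-python-app
          image: abhishekf5/python-sample-app-demo:v1
          ports:
            - containerPort: 80
          volumeMounts:
            - name: db-connection
              mountPath: /etc/config   # hypothetical path; key db-port appears as /etc/config/db-port
              readOnly: true
      volumes:
        - name: db-connection
          configMap:
            name: test-cm              # every key in the ConfigMap becomes a file
```

Inside the container, `cat /etc/config/db-port` should print the stored port value.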

RBAC- Role-Based Access Control (Related to security)

Users:

Service Accounts:

Roles:

Cluster Role:

Role Binding:

CRB (Cluster Role Binding):

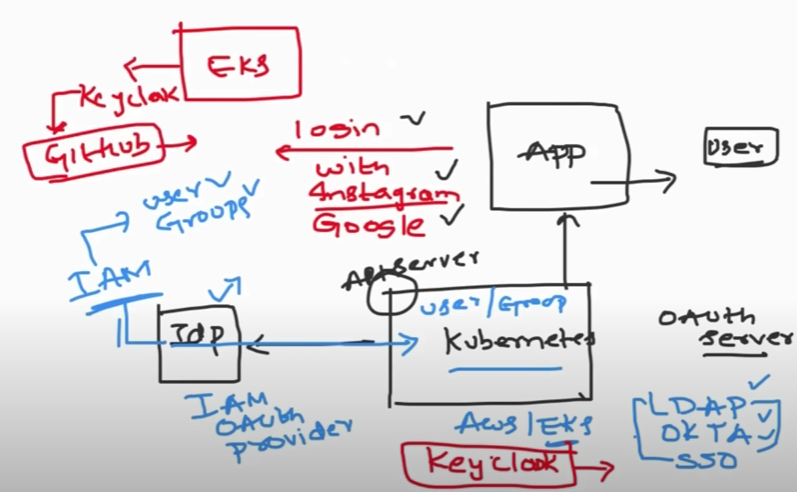

K8s doesn't deal with user management.

At compile time, when primitive data types are assigned memory, it is called static memory allocation; when they are assigned memory at runtime, it is dynamic memory allocation.

K8s offloads user management to identity providers.

A default Service Account gets created automatically in every namespace.

Service Account <- Role Binding -> Role
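A sketch of the Service Account <- Role Binding -> Role relationship; all names are hypothetical. The Role grants read-only access to pods in the `default` namespace, and the RoleBinding attaches it to the Service Account. A ClusterRole and ClusterRoleBinding follow the same shape but are not namespaced.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: app-sa                 # hypothetical name
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader             # hypothetical name
  namespace: default
rules:
  - apiGroups: [""]            # "" = core API group (pods live here)
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods              # hypothetical name
  namespace: default
subjects:                      # who gets the permissions
  - kind: ServiceAccount
    name: app-sa
    namespace: default
roleRef:                       # which permissions they get
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

`kubectl auth can-i list pods --as=system:serviceaccount:default:app-sa` is a quick way to check the binding.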

The K8s API server exposes a lot of metrics.

Prometheus stores information in a time-series DB.

Using Helm, add the Helm repo.

When you call kube-state-metrics on its metrics endpoint, it gives you a lot of information about your existing K8s cluster, beyond what the K8s API server provides.

CRD (Custom Resource Definition): a YAML file used to introduce a new type of API to K8s; it defines all the fields a user can submit in the custom resource.

CR:

Custom Controller:
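A sketch of a CRD and a matching CR, adapted from the CronTab example in the Kubernetes documentation; the group `stable.example.com` and all field names are illustrative. Without a custom controller watching CronTab objects, the CR is simply stored and nothing acts on it. Apply the CRD first and let it become established before creating the CR.

```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: crontabs.stable.example.com   # must be <plural>.<group>
spec:
  group: stable.example.com
  scope: Namespaced
  names:
    plural: crontabs
    singular: crontab
    kind: CronTab
    shortNames:
      - ct
  versions:
    - name: v1
      served: true
      storage: true
      schema:
        openAPIV3Schema:              # the fields a user may submit in the CR
          type: object
          properties:
            spec:
              type: object
              properties:
                cronSpec:
                  type: string
                image:
                  type: string
                replicas:
                  type: integer
---
# The custom resource (CR): an instance of the new API type
apiVersion: stable.example.com/v1
kind: CronTab
metadata:
  name: my-new-cron-object
spec:
  cronSpec: "* * * * */5"
  image: my-awesome-cron-image
```

Once applied, `kubectl get crontab` (or the short name `kubectl get ct`) lists the custom resources.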

2 Actors:

DevOps: Deploying the custom resource definition and the custom controller is the responsibility of the DevOps engineer.

Users: Deploying the custom resource can be done by the user or by the DevOps engineer.

Extend the API of K8s

1 Primary Group

15 Secondary Groups

Every new file is owned by the user's primary group.

Users can belong to multiple secondary groups.